Building My Dream App with Claude Code

I first gave Claude Code a try when it appeared eleven months ago. After coding an RSS app for iOS (which I'm still working on), I realized it had great potential (it can code Swift just by me asking it to) and some pretty gnarly drawbacks (its knowledge of Swift is dated, and its context window sucked/still sucks).

Fast forward to the start of this year, and suddenly everyone is talking about how they love Claude Code. This took me by surprise a bit, as I had moved most of my LLM work from Claude to Gemini 3 (mostly due to its superior context window). That said, Claude's models have made some pretty major advancements over the last six months alone, including a larger (but not Gemini-sized) context window, while also allowing Claude Code to directly edit files in Xcode.

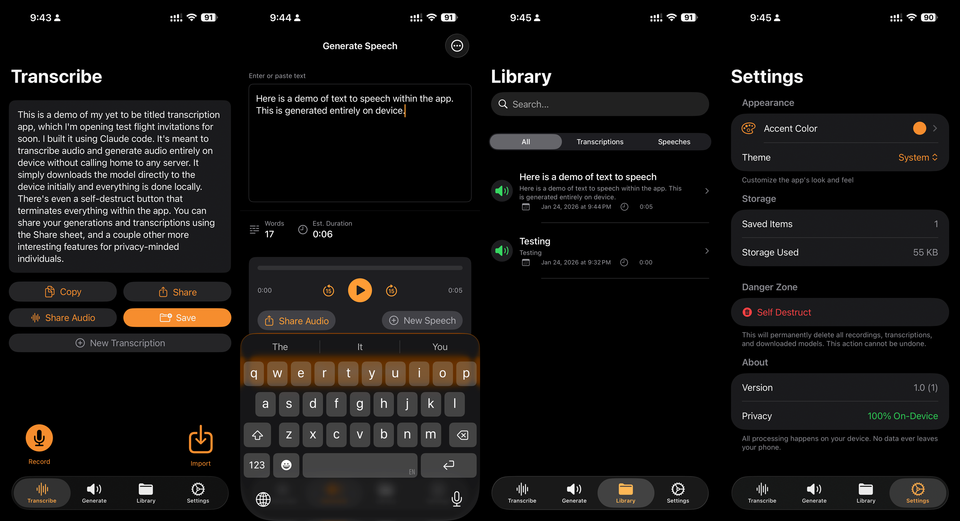

With this in mind, I took an app idea I had and went to work over the past weekend, creating an iOS app that accurately transcribes audio files locally (on-device) and generates spoken audio from text. The aim of the app was to take libraries like OpenAI's Whisper and open-source text-to-speech models, download them to the device, and run them in a private setting. No calling home. No connecting to servers to do any work. Just the same abilities you get with Granola, Gong, or Otter (as well as tools like ElevenLabs Reader), albeit with complete and total user privacy in mind.

And I built it. In a day, no less. Most importantly, I did this with almost no programming experience.

The Worst Computer Science Major Ever

In 2004, I entered school to study computer science. After a semester, I not only hated the work, but I also came to the conclusion that the internet and mobile technology would clearly max out at some point (take that, Moore's Law!) and I would be stuck working on Java applications for Nokias and Blackberries post-grad. (I was 100% right on all of this, naturally.) I took a gap year, got my AA in Film Studies, and got my Bachelor's in English with a minor in Gender Studies. That's how much I wanted to distance myself from studying computer science.

I still kept tabs on the latest technologies and developments in the developer world, despite not being a developer myself. When I entered the working world, I worked with developers, learning over the years how software is developed, planned for, divided in terms of engineering responsibilities, and ultimately shipped. I got to know which libraries and APIs serve specific purposes, how to debug, and how long things actually take compared to how non-engineers want things to work. I could be a pretty decent product manager should I ever want to pursue such a path.

Yet I never pursued any engineering past the HTML and CSS I learned in 1995 and 2003, respectively, and some JavaScript I begrudgingly learned in college. (I picked up Python over the last few years thanks to LLMs.) But I could certainly describe in great specificity what I want in an app and troubleshoot my way around problems and bugs when they arose.

Transcribe and Talk (Tentative)

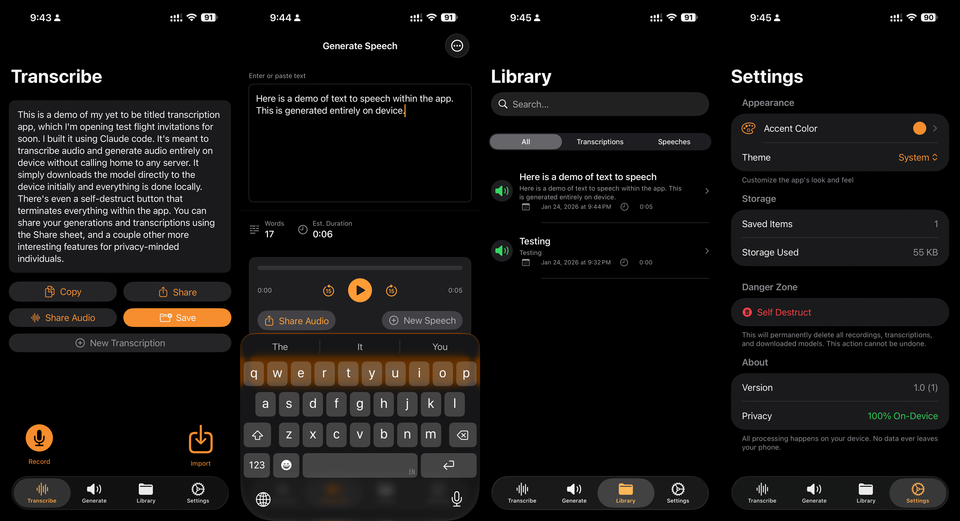

Using the latest edition of Claude Code, already installed in the Claude app on my desktop, I gave it the following prompt:

Using OpenAI's Whisper Turbo and using Eleven Labs' open source, could you make an ios app (using swift) that either records audio or imports audio (via sharesheet) and transcribes it OR allows the user to copy and paste text or import popular documents to turn them into audio. So a transcription audio — speech to text — and giving text a voice — text to speech. All of this should be local. If the app is deleted, everything is deleted. Nothing phones home, and the only interaction the user has is upon first ever launch of the app to download the models locally to the iphone. You can share creations via share sheet, but that's it.

After a few tries and a few follow-up guidance prompts, it made an MVP of this. I was able to build it in Xcode (I have an Apple developer account from my previous attempts) and launch it on my iPhone. After further refinements, I will soon have a TestFlight (beta test) for what I'm tentatively calling Transcribe and Talk for iOS devices, which you can sign up for here.

Problems

Transcribe and Talk works. As you see in the screenshot, the Whisper Small model was successfully downloaded to my iPhone 16 Pro Max and transcribed what I spoke into it. Text-to-speech also works, albeit on the internal Apple TTS models, which sound incredibly robotic.
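For the curious, speaking text with Apple's built-in voices takes only a few lines of Swift. What follows is a minimal sketch of that kind of call using `AVSpeechSynthesizer` from AVFoundation, not the code Claude actually wrote for the app. The `Speaker` class, the `makeUtterance` helper, and the `en-US` default are hypothetical names and values chosen for illustration.

```swift
import AVFoundation

// Hypothetical wrapper for illustration; not taken from the app's source.
final class Speaker {
    // Keep the synthesizer alive as a property; if it is deallocated, speech stops.
    private let synthesizer = AVSpeechSynthesizer()

    // Builds an utterance that uses a system voice for the given language code.
    func makeUtterance(for text: String, languageCode: String = "en-US") -> AVSpeechUtterance {
        let utterance = AVSpeechUtterance(string: text)
        // If no voice matches, this is nil and the system default voice is used.
        utterance.voice = AVSpeechSynthesisVoice(language: languageCode)
        utterance.rate = AVSpeechUtteranceDefaultSpeechRate
        return utterance
    }

    // Queues the text for playback through the device's audio output.
    func speak(_ text: String) {
        synthesizer.speak(makeUtterance(for: text))
    }
}
```

Everything here runs on-device with the voices that ship with iOS, which is why it is quick to wire up and also why it sounds robotic next to newer neural TTS models.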

As it turns out, loading Whisper Large Turbo, a better but bigger and more resource-intensive transcription model, takes a lot more than simply talking to Claude a few times. So does adding more robust and realistic text-to-speech models. Thus, the app currently has pretty good but not state-of-the-art speech-to-text transcription, and pretty robotic but somewhat quick text-to-speech generation.

It also has, in its first iteration, themes (light and dark mode, as well as an accent color picker), the ability to import documents or audio, export abilities (share your transcripts and generations via Share Sheet), and a button that allows you to blow up the entire app and start again.
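The "blow up the entire app" button is easy to picture in code. Here is a rough sketch of one way such a reset could work, assuming (and this is only an assumption) that the downloaded models, transcripts, and generated audio all live inside a single folder in the app's sandbox. The `wipeContents(of:)` function is a hypothetical name, not something from the app itself.

```swift
import Foundation

// Hypothetical helper: deletes every item inside `directory` but keeps the
// directory itself, then reports how many top-level items were removed.
func wipeContents(of directory: URL, fileManager: FileManager = .default) throws -> Int {
    let items = try fileManager.contentsOfDirectory(
        at: directory,
        includingPropertiesForKeys: nil
    )
    for item in items {
        // removeItem deletes files and whole subfolders alike.
        try fileManager.removeItem(at: item)
    }
    return items.count
}
```

A reset button could call this on the app's data folder and then send the user back to the first-launch model download. Since nothing is stored off the device in this design, clearing that folder is all a reset would need to do.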

Why I'm Building This

It's kind of weird that multi-billion-dollar transcription companies exist when open source models perform just as well for free. I also find it strange that people trust these companies with their most sensitive data in a cloud when what they want can be done entirely on device in the most private way possible.

I built this particularly for people who work with sensitive information, like journalists who want to record an interview and don't trust any of the aforementioned companies. These libraries for text-to-speech and speech-to-text work really well locally. I transcribe anything I record locally using Audio Hijack, which itself uses Whisper, and decidedly not with things like Gong, because I feel those tools are overly complicated.

Apps that transcribe using Whisper on iOS currently exist. Whisper Notes is a fantastic app that I use for iOS. I simply wish that I could take articles, PDFs, and other documents and have them read to me, which is why I feel the text-to-speech component is important.

In the coming weeks and months, I'll launch Transcribe and Talk — which I'm considering changing to Pretty Good Audio — on the App Store. I aim to build on it by allowing it to record local audio (Zoom audio on a Mac, for instance) shortly after its launch, shaping it into the app I want and selling it for the low price of free (with the option for a small donation to cover the cost of my developer account).

Until then, you can always sign up here to access the app early.

Claude Code is pretty amazing, even and especially for someone like me with limited engineering capabilities. Yet it is not something that you can play with and suddenly find yourself with some kind of app. It's something you need to come to with an idea and shape into a working product. A lot of friends I showed my app to said they have yet to figure out which app they'd use Claude Code to create, which is totally understandable. Once they do figure out that idea, Claude Code seems almost fully equipped to build such a thing.

Using Claude Code also made me realize I should probably stop glorifying Gemini for a minute and give my paid Claude subscription another look. I used it for two years straight before Gemini, and still feel Anthropic will be the generative AI company by the end of this year. For now, I have it working out a few bugs, searching for better TTS libraries, and building something I came up with in a paragraph across a few thousand lines of code.

App of the Week: Transcribe and Talk

Self-serving, I know, but you can sign up to access it for free here.
